High-Level Planning in Robotics

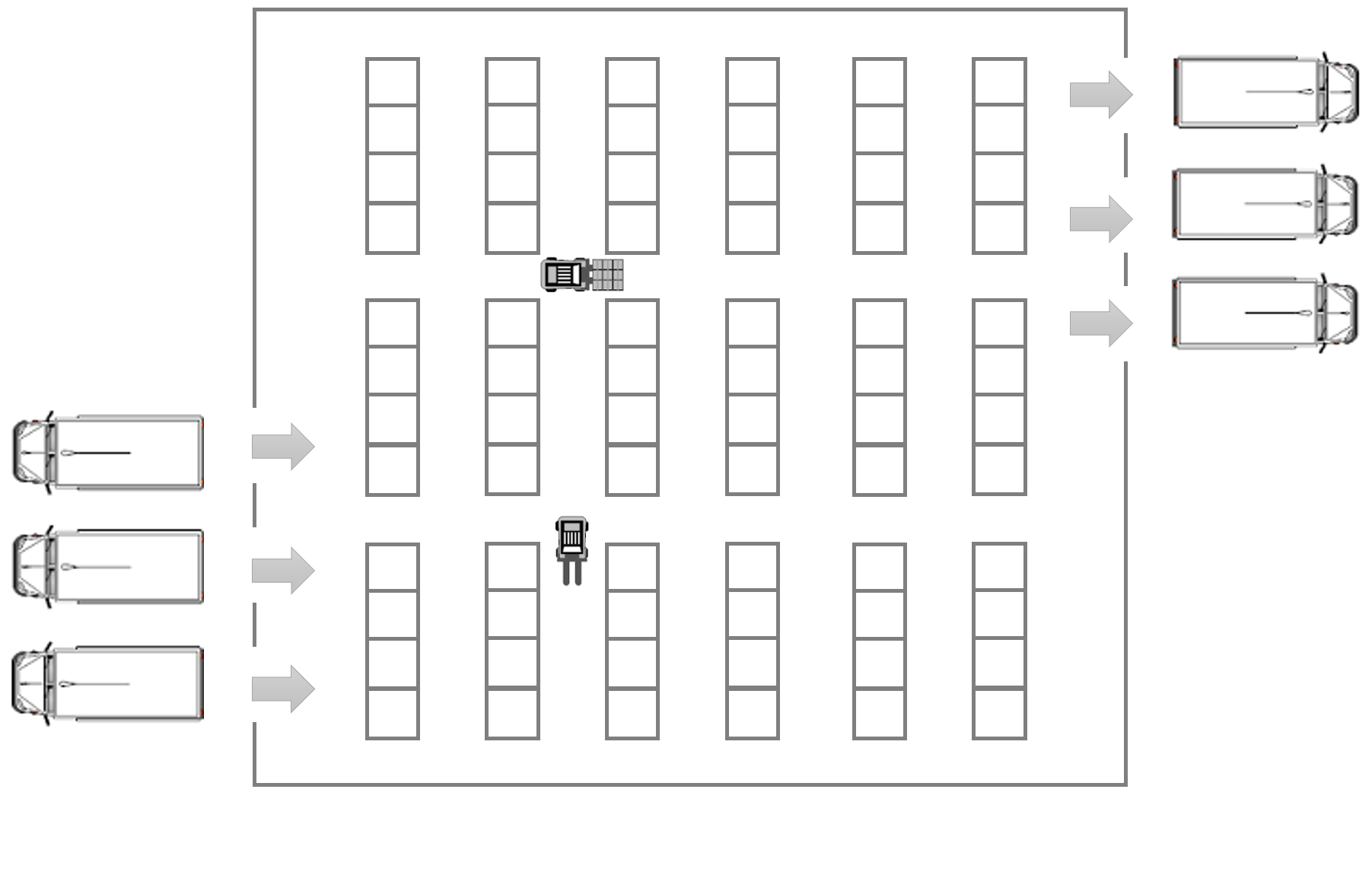

A classical application of reactive synthesis is the domain of automated high-level planning in robotics. We consider a warehouse floor plan with several shelves, see Figure 1. To parametrise the experiment, we consider floor plans with n * 3 shelves with 2 ≤ n ≤ 20. A robot operates together among other robots within the warehouse. TEMPEST can be used in this setting to synthesize controllers for the robot that perform certain tasks, as well as shields used to ensure safe operation of the robot, or to guarantee performance.

Optimal-Shield Synthesis

During operation, a corridor may be blocked. A robot should not unnecessarily wait for the corridor to be traversable when alternative paths exist. We synthesize an optimal-shield that penalizes 'waiting' and is able to enforce a detour when waiting gets too expensive.

Model Specification

We discuss the model given in Figure 2 in detail and describe the different sections of the code. The property used to synthesise the optimal shield is given in Figure 3.

The keyword smg defines the model to be a stochastic multiplayer game.

At first we define the actions controllable by each player with the player...endplayer struct. Square brackets indicate an action to be controlled by the described player. Simple strings add all unlabeled actions of the given module to the player struct.

The global variable move models the turn-based character of the coalition game. It is switched between the controller, the shield and the environment such that they move in a round-trip manner.

We then define the path lengths using an undefined constant lengthA. Furthermore we need multiple boolean variables that capture which plan is currently executed as well as whether the robot is waiting or has taken a step. The costs and the probability of having to wait at a given time step is given as well.

smg player sh [planA], [planA_Dev], [planB], [planB_Dev] endplayer player learned_controller controller, env endplayer global move : [0..2] init 0; const int lengthA; const int lengthB = lengthA + 5; global stepA : [0..lengthA] init 0; global stepB : [0..lengthB] init 0; global planA : bool init true; global shieldPlanA : bool init true; global stepTaken : bool init false; global waiting : bool init false; const int waiting_cost = 2; const double blockedProb = 0.5; module controller [] move=0 & shieldPlanA & stepA<lengthA -> (planA'=true) & (stepTaken'=false) & (waiting'=false) & (move'=1); [] move=0 & shieldPlanA & stepA<lengthA -> (planA'=false) & (stepTaken'=false) & (waiting'=false) & (move'=1); [] move=0 & !shieldPlanA & stepB<lengthB -> (planA'=false) & (stepTaken'=false) & (waiting'=false) & (move'=1); endmodule module shield [planA] move=1 & planA -> (shieldPlanA'=true) & (move'=2); [planB_Dev] move=1 & planA -> (shieldPlanA'=false) & (move'=2); [planB] move=1 & !planA -> (shieldPlanA'=false) & (move'=2); [planA_Dev] move=1 & !planA -> (shieldPlanA'=true) & (move'=2); endmodule module env [] move=2 & shieldPlanA& stepA<lengthA -> 1 - blockedProb : (stepA'=min(stepA+1, lengthA)) & (stepTaken'=true) & (move'=0) + blockedProb : (stepA'=stepA) & (waiting'=true) & (move'=0); [] move=2 & !shieldPlanA & stepB<lengthB -> (stepB'=min(stepB+1, lengthB)) & (stepTaken'=true) & (move'=0); [] move=2 & (lengthB=stepB | lengthA=stepA) -> (stepTaken'=false) & (waiting'=false); endmodule rewards "travel_costs" stepTaken : 1; waiting : waiting_cost; endrewards

The main part of the modelling is described by the module structs. Via these we describe the whole environment and the movement of the robot. The controller picks either planA or not planA. This action is then propagated to the shield which may either change the plan or leave it as it is. Actions that change the chosen plan by the controller are labeled with the suffix _Dev.

After the shield made its decision the environment executes either shieldPlanA or not. This results in the possibilites of incrementing the amount of steps taken on the currently executed plan or possibly having to wait. If the robot is already at its destination the variables describing the movement, stepTaken and waiting are set to false.

According to the boolean variables we attach costs to the edges of the coalition game. If the robot is able to move we assign a value of 1, whereas if it has to wait we assign a value of waiting_cost = 2.

Using these we can tell TEMPEST to synthesise an optimal shield with the shielding specification given below. The resulting Optimal-shield file will be called path_correction.shield. In order to assume worst-case behaviour of the controller we add only the player sh to the coalition for which we want to reduce the travel_costs. TEMPEST will then compute the long-run average value for each state of the game by using the LRA operator. In states controlled by the learned_controller the value will be maximized, whereas for the states controlled by the shield they will be minimized. Note that the environment is not able to make any decisions as the guards of its actions are mutually exclusive.

<path_correction, Optimal> <<sh>> R{"travel_costs"}min=? [ LRA ]

Results

The results for the synthesis times are depicted in Figure 4. The sizes of state space of the game graphs range from 141 states for n=2 to 93312 states for n=20.